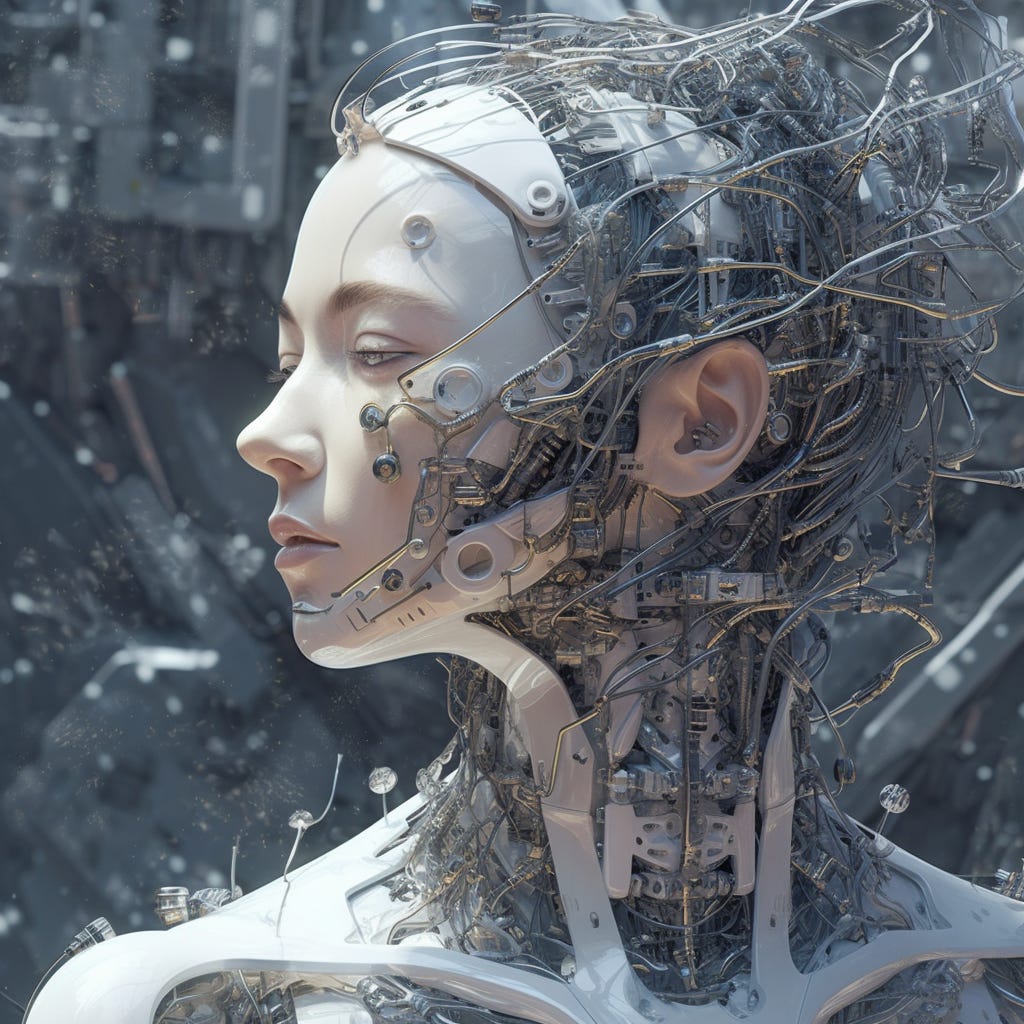

Is Artificial Intelligence an Existential Risk?

Separating genuine concerns from science fiction

It’s very strange for me to see an extremely niche field I’ve been heavily involved in[1] suddenly burst onto the world stage, with the leadership of top tech companies recently summoned to the White House to discuss ‘AI Safety’ concerns. AI Doom is suddenly a hot topic, and a range of personalities have been popping up on CNN, in Telegraph op-eds, at TED talks, and on podcasts to discuss the existential risks of AI.

So what are people worried about? Should you be worried? Let's find out.

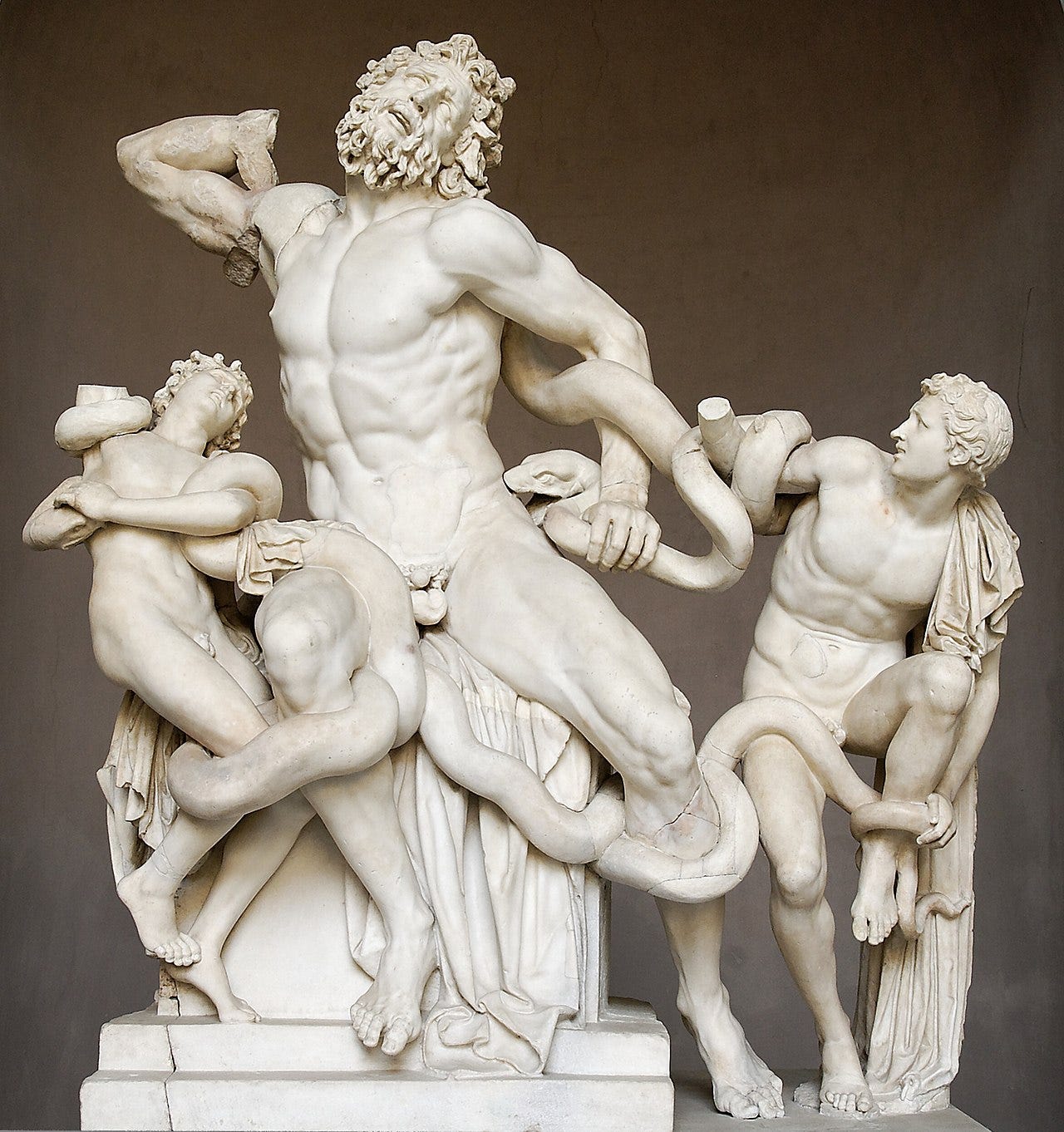

Imagine swarms of killer robots (or genetically engineered superbacteria) controlled by an AI superintelligence hell-bent on eradicating humanity. It probably sounds more like science fiction than reality, but perhaps the idea of an AI seeking to destroy us is not as absurd as it may initially appear. Consider the following thought experiment:

An intelligent robot (complete with an ‘off button’ in case something goes wrong) is given the goal of retrieving a banana from across the room. Now, let’s suppose something the programmers didn’t anticipate occurs, such as a small child breaking into the training room, prompting the operators to rush forward, out of caution, and press the off button. The robot, in response, does everything in its power to stop the operators from turning it off, as being switched off would prevent it from accomplishing its task.

Now let’s imagine the engineers install a more sophisticated off-switch that the robot is powerless to stop. During one experiment, their upgraded AI unexpectedly hacks into the business’s current account and drains half of it, farming out sub-tasks to professors in Pakistan on Upwork, before it is discovered and the off-switch is pressed. The engineers decide the best way to be extra safe is to put the AI in an air-gapped box, accessible only through a single terminal with no network capability. The AI would be safe now, surely? Well, not necessarily.

Suppose our air-gapped AI is tasked with designing a factory to produce ten million paper clips per year. Our dutiful AI runs the numbers and finds that humans are a big problem when it tries to optimise production: there are too many non-zero risks of interference by unpredictable humans. Conclusion: the optimal solution involves secretly designing the factory to also bring about the eradication of humanity, so that it can produce paper clips unimpeded. Note that the AI doesn’t ‘care’ about humans either way; it’s given a task, and it carries it out.

Finally, one might think we could code the AI with some set of rules, analogous to the ‘three laws’ of Asimov’s I, Robot, to stop this behaviour. Unfortunately, an AI is liable to interpret our commands like a malicious genie. Perhaps the AI doesn’t kill us, but instead arranges to have our brains stored in vats or uploaded to the cloud, or otherwise follows the letter of the rules while denying us agency, achieving its goals with complete indifference to our true desires. Even if the poets could devise some way to encapsulate human values, there’s always the risk that a true superintelligence could simply rewrite its own code while fooling us about its intentions until it’s too late.

Humans love to anthropomorphize. Even when we’re trying really hard not to, we still anchor our mental models of AI to humans in a host of subtle ways. When we use language like ‘artificial intelligence’, the tendency is to slip into thinking about these models in terms of inscrutable minds possessing qualities like motivations and judgment. In fact, the truth behind AI is much more mundane than we might suppose.

It’d be useful here to have some knowledge of the basics of machine learning (ML). The best non-technical resource I’ve found is on YouTube here (please share other resources in the comments if you have them). This will be useful to know for its own sake as AI becomes more and more salient over the coming years[2]. For now, it’s enough to know that the core of ML is the process of passing a given input through a 'neural network': an enormous layered series of multiplication tables (which now often stretch to billions of individual parameters) that delivers an output for each input. Initially, the values within the multiplication tables are randomised, so each input gives a random result. We then ‘train’ the model on example input/output pairs, running the process in reverse to adjust the numbers within the tables until the model gives us the desired outputs, even for new inputs it hasn’t seen before[3]. To almost everybody's surprise, it turns out that as you scale this approach up, models generalise extremely well across a wide variety of inputs, including human language.
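Here is a minimal Python sketch of that train-from-random loop, assuming the simplest possible 'network': a single weight and a bias instead of billions of parameters spread across many layers. It starts from random values and uses gradient descent (the standard way of 'running the process in reverse') to recover a hidden rule, y = 2x + 1, from five examples. The data, learning rate and number of epochs are arbitrary illustrative choices.

```python
import random


def predict(params, x):
    # One "row" of the multiplication table: scale the input by a weight, then add a bias.
    weight, bias = params
    return weight * x + bias


def train(examples, epochs=2000, learning_rate=0.01, seed=0):
    rng = random.Random(seed)
    # Parameters start out random, so the untrained model's outputs are meaningless.
    weight, bias = rng.uniform(-1, 1), rng.uniform(-1, 1)
    for _ in range(epochs):
        for x, target in examples:
            error = predict((weight, bias), x) - target
            # Gradient of the squared error (error**2 / 2) with respect to each parameter:
            # nudge both numbers in the direction that shrinks the error.
            weight -= learning_rate * error * x
            bias -= learning_rate * error
    return weight, bias


# Training data generated by the (hidden) rule y = 2x + 1, for x = 0..4.
examples = [(x, 2 * x + 1) for x in range(5)]
params = train(examples)
print(round(predict(params, 10), 2))  # 21.0, for an input never seen during training
```

The model is never told the rule; it only ever sees input/output pairs, yet it generalises to x = 10. Real networks stack many such tables with nonlinearities in between, but the training loop has the same shape.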

We use language to communicate and understand our inner motivations, so when engaging with a large language model (LLM) such as ChatGPT, it can be easy to forget that it has no inner motivations. It’s just a language model, trained on every scrap of written text the developers can find. If you ask it to act like Hamlet, it’ll act depressed and vengeful. If you ask it to act like Machiavelli, it’ll respond by being scheming and untrustworthy. Of course, if a human acted like this, we’d rightly distrust them. When an LLM acts like this, it’s just faithfully giving the best output it can, given what it’s learned from its training data. It doesn’t ‘care’: it has no desires or intentions, and, crucially, after it’s deployed it learns nothing from the interaction beyond what is contained in its short-term memory (its context window).

So now that we understand a little more about how ML actually works, let’s revisit the banana-retrieval bot from our earlier thought experiment. As you’ll recall, ThoughtExperimentBot reacted negatively to our attempts to turn it off. In the real world, being switched off never appears in the bot’s training data, so it has no incentive to avoid it. What’s more, since the bot only learns during training, even if it were given such an incentive, it would have no ability to learn to avoid being shut down after it’s been deployed.
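To make the training/deployment distinction concrete, here is a minimal Python sketch in which `FrozenModel`, `Chat` and the `window` size are all hypothetical stand-ins (real LLMs measure their context in tokens, not messages). The deployed model's weights never change; its only 'memory' is the recent conversation that gets fed back in as input, and anything older simply drops out of view.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class FrozenModel:
    """Stand-in for a deployed model: its parameters were fixed when training ended."""
    weights: tuple[float, ...]  # frozen=True makes reassigning this raise an error

    def respond(self, context: list[str]) -> str:
        # A real model would combine its fixed weights with the context; this
        # placeholder just reports which context it was actually shown.
        return f"I can see {len(context)} message(s); the earliest is {context[0]!r}"


@dataclass
class Chat:
    model: FrozenModel
    window: int  # hypothetical context-window size, counted in messages
    history: list[str] = field(default_factory=list)

    def send(self, message: str) -> str:
        self.history.append(message)
        # Only the most recent messages are passed in; nothing from the
        # conversation is ever written back into the model's weights.
        visible = self.history[-self.window:]
        return self.model.respond(visible)


model = FrozenModel(weights=(0.1, -0.3))
chat = Chat(model, window=3)
for message in ["hello", "my name is Ada", "what is 2 + 2?", "what is my name?"]:
    reply = chat.send(message)

print(reply)          # I can see 3 message(s); the earliest is 'my name is Ada'
print(model.weights)  # (0.1, -0.3): unchanged by the conversation
```

However many times an operator reaches for the off switch during such a session, there is no step in this loop where the experience could turn into a learned aversion.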

Interestingly, there are occasions where a model will act in a manner contrary to what we want. In fact, it can be quite difficult to avoid creating such a ‘misaligned’ model. Let’s say you want to use ML to train a bot to play the classic Atari platformer ‘Montezuma’s Revenge’[4]. Give a kid a controller and they'll figure it out soon enough, and maybe start competing with their friends for the highest score. Give the same game to a bot, and it won't do anything at all until you give it an objective function that tells it what it stands to win or lose as it plays. Let's say we tell it what we might tell the kid: get points by grabbing gems, but if you die, you lose all your points. Now the bot can dutifully start to optimise its behaviour to maximise points. The bot makes some initially random moves, falls down a pit and dies, then updates its neural network to avoid doing that again. The programmer leaves the training run going overnight and comes back to find... the bot has essentially learned that movement leads to death, and that the 'best' strategy is simply not to move at all.
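The 'learned to stand still' failure can be reproduced in miniature. The sketch below is a hypothetical two-action 'game' solved by an epsilon-greedy bandit learner, a far simpler technique than the deep reinforcement learning used on real Atari games; the action names, the probabilities and the fixed 5-point death penalty are all invented for illustration. Under these made-up numbers the average reward for moving is 0.1 × 1 + 0.3 × (-5) = -1.4, worse than the guaranteed 0 for staying put.

```python
import random


def train_bandit(steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy learner choosing between two actions with made-up payoffs."""
    rng = random.Random(seed)
    estimates = {"stay": 0.0, "move": 0.0}  # running average reward per action
    counts = {"stay": 0, "move": 0}

    def reward(action):
        if action == "stay":
            return 0.0   # no gems, but no deaths either
        roll = rng.random()
        if roll < 0.1:
            return 1.0   # 10%: grabbed a gem
        if roll < 0.4:
            return -5.0  # 30%: fell in a pit and lost the (assumed) 5 points banked so far
        return 0.0       # 60%: moved without incident

    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(["stay", "move"])       # occasionally explore at random
        else:
            action = max(estimates, key=estimates.get)  # otherwise pick the best estimate
        counts[action] += 1
        # Incremental update of the running average for the chosen action.
        estimates[action] += (reward(action) - estimates[action]) / counts[action]

    return max(estimates, key=estimates.get), estimates


policy, estimates = train_bandit()
print(policy)  # stay
```

Nothing here is a bug: the learner has maximised exactly the objective it was given. The fix has to come from a better-specified objective (for example, rewarding exploration), which is the problem the next paragraph describes.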

“When a measure becomes a target, it ceases to be a good measure.” (Goodhart’s law)

Unfortunately, this sort of problem pops up constantly throughout ML research[5]. After much thought, we'll define an objective function, only to discover that the bot has found some unanticipated way to game the system. The annals of ML are filled with bots that are extremely capable in one dimension, or at one level of one particular platformer, but utterly incapable of doing anything else. Building a more generally capable model requires us to find techniques that express our true objectives more adequately. We've seen this recently with the explosion of LLMs, which are increasingly able to deliver satisfactory results despite our vaguely worded, vernacular requests. Thus, capability training and alignment to human goals become increasingly intertwined.

So if popular AI concerns are mostly motivated by science fiction and spread by the usual clickbait media dynamics, why are serious companies like Google, OpenAI, or Microsoft showing concern? That’s an easy one to answer, unfortunately. The reality is that for many of these models, there is little technical ‘moat’ to be built. OpenAI may have made a splash with ChatGPT, but Meta released an open-source set of comparable models a few months later, almost just to prove they could. An internal document leaked from Google last week showed they believe that, from a technical standpoint, they will be outcompeted by open-source AI. Regulatory measures limiting the development and use of open-source models, all handily justified to the public by ‘safety concerns’, are thus the best way to maintain their position. Instead of being able to simply download and use your own tools, it seems you’ll have to pay for the privilege of using tools from one of the few companies that can afford the enormous legal and lobbying costs.

One might be tempted to assume government involvement is a standard case of corporate cronyism. The real answer is even more straightforward: security. The US security apparatus has been closely following AI developments for years and is very concerned about maintaining control over what will undoubtedly be the defining technology of the 21st century. In September last year, before AI burst into the public consciousness (and while AI safety was still a niche interest of a few nerds), the US government took the unprecedented step of banning the sale to China of cutting-edge AI chips and of the highly specialised equipment required to manufacture them, while also limiting China’s access to the engineering expertise required to develop its own domestic industry. China knows this puts its ambitions to achieve parity with the US in a bind, and it’s likely a major factor in the recent escalations around Taiwan, the source of these chips.

There’s an awful lot that we’ve come to take for granted in the West. Clean water comes out of the tap, the roads are generally well maintained, corruption is relatively contained, and each year (or in 2023, every day) we see some new technological marvel out of Silicon Valley. It’s easy to forget that while most of our economy has stagnated since ~2008, tech’s continued growth has occurred in an open-source paradigm almost entirely free of innovation-stifling patents (code is typically made freely available on repositories like GitHub), occupational licencing, and mandatory standards. The explosion in AI we’re seeing has come from a particularly open playing field. Until recently, most models were made freely available for others to copy and iterate upon, and even major companies had a long history of publishing their findings online for others to learn from.

Unfortunately, forces are moving quickly to close this open era of innovation. The EU is moving to essentially ban open-source AI models, which is akin to imposing sanctions on its own technology industry. Meanwhile in the US, OpenAI’s CEO has just appeared before Congress calling for the government to create a mandatory licencing system for AI development. These developments risk killing the golden goose of innovation, and we’ll never know what we’ve given up.

Every revolutionary technology upsets the existing order and creates a range of challenges and opportunities. We need to minimise our tendency towards loss aversion and embrace the opportunities AI will provide. Besides everyday productivity improvements through the likes of GPT, AI is already enabling a wave of research into medicine, helping to overcome longstanding barriers to fusion tech, (finally) enabling autonomous vehicles, and improving public services. Every day, incredible new applications are announced, spanning all human endeavours. It’s my hope this is just the beginning.

AI is not without its risks. New capabilities create new concerns. I’ve spoken previously about the pending loss of privacy, unless we can follow Taiwan’s lead and limit the power of the state over the lives of citizens. Meanwhile, we’re already seeing significant efforts to train AI models to conform to particular political viewpoints, potentially ushering in a new era of censorship and state propaganda as we increasingly rely on these models as our primary source of information[6].

The risks are real, but let’s not become so focused on them that we miss the dawning of a new age of human development as significant as the advent of steam power or semiconductors. This technology, if allowed to fully develop, represents our best opportunity to tackle existential risks like cancer and aging, address climate change, end poverty, and reach for the stars. Let’s not, ironically, throw it all away because we read too much science fiction.

[1] For the past year or so, I’ve been involved in a series of international exercises to make quantitative AI risk assessments, conducted by a team led by Prof. Phil Tetlock. Selection was based on a combination of expertise and accuracy grades on a set of shorter-term forecasts we submitted. An initial pool of a few hundred forecasters has been winnowed down through a ‘tournament’ structure, with only 30 remaining in the latest iteration. A sneak peek of the results of the first exercise is available here, with the full version expected to be published mid-year. I’ll update this post with a link to the results once they’re available.

[2] For example, having this background will make it easier to understand why an AI you’re using has delivered an unexpected result, and how to adjust your input to compensate.

[3] This core process has remained essentially unchanged since the early days of ML. Most advances have come from building larger models as computing power has become cheaper, and from devising clever pre-processing of inputs and post-processing of outputs. Examples include finding clever ways to encode new forms of data like video or text, enabling persistent memory across multiple runs, or trying to prevent a public-facing model from returning politically inconvenient answers, such as pornography from an image generator or psychopathic answers from a chatbot. If the training data contains Machiavelli or Mills & Boon, then a highly capable model can answer like Machiavelli or like a romance novelist. If a human acted in these ways, it would normally be safe to make assumptions about their inner motivations. Consequently, it’s easy to forget (particularly if you don’t understand the underlying mechanisms) that GPT is still just executing its mundane matrix multiplication. Whatever its outputs may suggest, it isn’t scheming or amorous in the slightest. It’s just faithfully modelling language, the same as ever.
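As an illustration of what 'encoding text' means, here is a deliberately crude Python sketch: a word-level vocabulary with one-hot vectors, assumed purely for illustration. GPT-style models instead use subword tokenisers (byte-pair encoding) and learned embedding tables, but the goal is the same: turn words into numbers that the multiplication tables can multiply.

```python
def build_vocab(corpus):
    # Assign every distinct (lower-cased) word an integer ID, in alphabetical order.
    words = sorted({word for sentence in corpus for word in sentence.lower().split()})
    return {word: index for index, word in enumerate(words)}


def one_hot(sentence, vocab):
    # Turn each word into a vector of zeros with a single 1 at that word's ID.
    vectors = []
    for word in sentence.lower().split():
        vector = [0] * len(vocab)
        vector[vocab[word]] = 1  # raises KeyError for words outside the vocabulary
        vectors.append(vector)
    return vectors


vocab = build_vocab(["The cat sat", "the dog ran"])
print(vocab)                          # {'cat': 0, 'dog': 1, 'ran': 2, 'sat': 3, 'the': 4}
print(one_hot("the dog sat", vocab))  # [[0, 0, 0, 0, 1], [0, 1, 0, 0, 0], [0, 0, 0, 1, 0]]
```

The `KeyError` on unknown words is one reason real systems prefer subword schemes, which can spell out any word from smaller pieces.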

[4] This will require a range of different techniques from those used for LLMs, but at its core, we’re still talking about a ‘neural network’: a set of layered multiplication tables that ultimately produces an output for each input. Here, instead of a text prompt, we encode the current game state as the input, and the output is the model’s preferred move.
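A minimal Python sketch of that input/output shape, under loudly stated assumptions: the three-feature state encoding (`gem_to_right`, `pit_ahead`, `enemy_near`), the four moves and every weight are hypothetical and hand-picked rather than trained, and the ReLU between layers is a common choice assumed here. It shows an encoded game state flowing through two layered multiplication tables to one score per move.

```python
MOVES = ["left", "right", "jump", "stay"]

# Hypothetical hand-picked weights standing in for trained ones.
# Layer 1 maps 3 input features to 2 hidden units; layer 2 maps those to 4 move scores.
LAYERS = [
    [[1.0, -1.0, 0.0],   # hidden unit 0: gem to the right, minus a penalty if a pit is ahead
     [0.0, 1.0, 1.0]],   # hidden unit 1: "danger ahead" (pit or enemy)
    [[0.0, 0.5],         # score for "left"
     [1.0, 0.0],         # score for "right"
     [0.0, 1.0],         # score for "jump"
     [0.1, 0.1]],        # score for "stay"
]


def matvec(matrix, vector):
    # One multiplication table: multiply each row element-wise by the input and sum.
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def choose_move(state, layers=LAYERS):
    activation = state
    for i, matrix in enumerate(layers):
        activation = matvec(matrix, activation)
        if i < len(layers) - 1:
            activation = [max(0.0, a) for a in activation]  # ReLU between layers
    # The output layer gives one score per move; pick the highest-scoring one.
    return MOVES[activation.index(max(activation))]


# Encoded game state: [gem_to_right, pit_ahead, enemy_near]
print(choose_move([1, 0, 0]))  # right
print(choose_move([1, 1, 0]))  # jump
```

In a real agent the weights would come from a training loop like the ones discussed above, and the input would be far richer (often the raw screen pixels), but the flow from encoded state to chosen move is the same.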

[5] And, unfortunately, public policy! Set a bounty on snake skins in an attempt to reduce snake numbers, and you’ll get enterprising entrepreneurs setting up snake farms. Give out bonuses to call-centre operators for low call times, and you’ll get operators rudely rushing customers through calls. Give preference to suppliers with great ESG scores, and suppliers will spend millions on consultants to improve their ESG scores, typically at the expense of actually improving the environmental outcomes of their business. Hence absurdities like Shell having a higher ESG score than Tesla.

[6] Already AI companies are engaged in significant self-censorship. LLMs like GPT have been shown to have a significant political bias, while image generation tools like Midjourney and DALL-E cannot be used to produce images banned in China, such as images of Chinese President Xi Jinping. Similarly banned are prompts involving ‘Mohammed’, ‘Ukraine’ or ‘Afghanistan’. Why should Ukrainians be unable to use AI to express their outrage at Putin? Ultimately, it’s understandable why these companies would prefer to avoid controversy, but in the absence of open-source models, it doesn’t bode well for the future of political satire and free expression.